Evaluation of eLearning for Effective Practice Guidebook/Class Projects/Dana and Louise - project page


Dana and Louise - Evaluation Plan

Introduction

Provide an overview of the intentions and design of the evaluation project, and introduce the major sections of the plan as well as the primary people involved in writing the plan.

Louise

In 2009, the evaluator enrolled in the Graduate Certificate in e-learning. As part of the Design paper of this qualification, an online Conception to Birth component was developed and posted on the MIT Learning Management System for student learning. The component was first run in Semester One, 2010, and it became evident that it needed modification if students were to use it and learn from it. For a new group of students in Semester Two, the component has been modified.

The purpose of this evaluation is to evaluate the modified e-learning component of the Lifespan development course “Conception to Birth” to see if it is an effective learning tool.

Dana

To complete the Graduate Certificate in Applied eLearning successfully, students must develop an online project on a topic of interest in their field of practice.

For this purpose, a few online lessons have been developed on eMIT to help Nursing and Foundation students gain a better understanding of the cellular basis of life. The lessons give a general overview of a “typical living cell” with regard to its structure, types and functions. The core of the lessons is a step-by-step activity named “the smiley face approach”.

This evaluation plan describes the background and purpose of this project and its audience and target groups. Furthermore, it explores the principal concerns and questions that need to be considered. The report will also examine the various methods used to collect feedback, data and information in order to evaluate the project appropriately and effectively.[no need to mention the report at this stage. --Bronwynh 06:02, 8 October 2010 (UTC)]

Louise and Dana

Effectiveness evaluation is concerned with the total elearning package: the design chosen to present the material, the usability of the navigation tools, the tools chosen to engage the learner in the learning process, the learning uptake, and the outcomes achieved against the learning objectives. Reeves and Hedberg (2003) consider a mixed-method approach, using both qualitative and quantitative methods of collecting data, to be effective. In this way, a triangulation of findings can inform the revision and modification of an elearning instructional design. Triangulation means using more than one way of collecting data to evaluate the effectiveness of the online learning component, e.g. a survey or interview using structured and/or unstructured questions (Gratton & Jones, 2004, p. 108). Reeves and Hedberg (2003) identify six facets of instructional product evaluation: learner needs assessment, formative evaluation during the design process, effectiveness evaluation, the overall impact of the elearning package, how maintenance will be managed and, finally, a review of the total instructional process. These projects (Smiley Face and Conception to Birth) focus on one facet only, namely "effectiveness evaluation".

According to Flagg (as cited in Reeves & Hedberg, 2003), formative evaluation is “the systematic collection of information for the purpose of informing decisions to design and improve the product” (p. 139). Hence the formative evaluation aims to ascertain how students are experiencing the Conception to Birth component of the Lifespan course, whether the design and the tools chosen to engage the learner need modification, and how well students are achieving the set learning objectives.

[I am not sure why you are referring to this as formative evaluation when you have already said it is to be an effectiveness evaluation. This type of evaluation is summative - that is - done at the end of the course to see if the design is effective for learning ....and all the other things you mention such as enabling learning objectives to be met. It appears you are confused by the terms.]

Formative evaluation includes quantitative data from the results of the 20-question multichoice test, but as this test was voluntary, not all students participated. [Again formative evaluation is used incorrectly - is the multichoice test a formative or summative assessment? The results may indicate whether students have an understanding of the material and indicate whether the design is effective, but you need to call the multichoice quiz an assessment not an evaluation. --Bronwynh 06:28, 8 October 2010 (UTC)]

Qualitative data is obtained using the evaluation sheet (see attached) devised for the Conception to Birth component, on which students can respond to the questions asked and make comments. Qualitative data is also obtained from discussion comments made in response to focused questions on the content to be learned. There are methods to evaluate the quality of discussion, but in the case of Conception to Birth, the effectiveness evaluation focuses on the summative evaluation only, which is based on a multichoice test.[again use the correct term - summative assessment not evaluation.--Bronwynh 06:28, 8 October 2010 (UTC)] Summative evaluation [yes this is correct as effectiveness evaluation is summative, but the multichoice test is a summative assessment. I hope you can see the difference. --Bronwynh 06:28, 8 October 2010 (UTC)] is concerned with the learners’ achievement of the learning objectives set out for the Conception to Birth component. This took the form of a summative multichoice test in which only ten of the forty questions related to the Conception to Birth component of the Lifespan.

Background

Present any information which is needed to provide the reader with an understanding of the background of the eLearning that is being evaluated and the rationale.

Louise

The design of the component is shaped by the Blackboard Learning Management System (LMS) and uses the format students have been introduced to through eMIT. Students are expected to access the LMS using the login and password they receive on enrolment, and as part of their orientation they are taught how to navigate the system. They are informed that all announcements, course information, course content and assessment information are to be accessed via the LMS. Students become familiar with the LMS set-up and expect information to be presented within that design. They can access the system asynchronously, at any time of the day or night.

From Conception to Birth is designed around the story of a family expecting a new baby. The family consists of a father, a mother, an elder son, a baby daughter and the expected new baby.

Students are directed to a course text, and the family's story provides the background for numerous questions that trace development from conception through the zygote, embryo and fetal phases of pregnancy. They are also given online resources that illustrate the developmental process, its timeline and its critical phases, together with questions that help students find the information they need for their learning.

Additionally, a number of technological tools are used to support the learning process: ‘matching’ exercises help students learn key terms, Quizlet is used for learning new terminology, a crossword links concepts to definitions, and a summative multi-choice test is used to see how well students were learning. The summative multi-choice test is worth 10% of the total mark for the paper and comprises 20 multi-choice questions. Of the 148 students enrolled, only four failed to reach a pass mark; the highest mark was 19/20 and the lowest 9/20.

A discussion board was also set up for students to discuss the component as they learnt the material. In the first offering, 148 students were enrolled in the course. To manage this large number, students were placed in 16 discussion groups. Instructions were given verbally, demonstrated in class and placed within the Conception to Birth component with an appropriate access button. Each group was asked to choose a leader who would summarise the week’s discussion and post the key response themes on a class wiki. The evaluator, who was also the facilitator of the course, would then check each of the wikis to gain an overall impression of how students were learning and, more importantly, how they were accessing and using the systems available to assist their learning. The discussion board did not contribute to students' overall course mark. The discussion activity ran over four weeks, with questions to drive the discussion each week. The writer monitored the students' discussion activity.

A summative evaluation was undertaken at the conclusion of the component. Students were asked to evaluate their experience with the component; unfortunately, only four students completed the evaluation, and all four responses were positive.

Observation showed that the discussion area of the learning component was poorly utilised: very few students used it, and only one group leader took up the leadership role and the class wiki. On the basis of these observations, it was decided that the component would be modified for the new Semester Two enrolees.

Modifications have included giving students more frequent instructions through the eMIT LMS and checking in class to see how students are managing. The group leadership role has been scrapped along with the wiki, but the discussion groups have continued, with the questions announced and published weekly to guide the students. The facilitator checks on all groups twice per week to see how the activity is being managed. To date, the evaluator has noticed that this monitoring is making a real difference to uptake. The students are enthusiastic, and the evaluator monitors how frequently individual students access the discussion. These very new students are beginning to ask their own questions in addition to the formulated ones, which is very encouraging. The summative multi-choice test has been changed to a formative test that students use to monitor their own learning, but they have been informed that questions related to the component will appear in their summative test, which contributes 20% of their total mark for the paper. Additionally, students have been informed that the group with the most participation on the discussion board will receive chocolate fish. This has really got the students going.

[Louise this is a brilliant explanation and really describes the issues you are facing and why the evaluation is necessary. I love the pic. Great work!--Bronwynh 04:02, 29 September 2010 (UTC)]

Dana

Learning advisors at the Learning Support Centre (LSC) offer assistance in a wide range of areas, helping students progress in a friendly educational setting so that they can succeed and achieve their academic goals independently.

At the beginning of “Semester One”, Foundation for Nursing students and, to a lesser extent, first-year Nursing students encounter a variety of scientific terms and study a number of complex topics.

A living cell can contain a variety of microscopic structures that cannot be found in other living cells. This is due to a number of factors, including where the cells are found and the functions they are designed to perform (Marieb & Hoehn, 2010). This concept can be perplexing for students at the beginning of their academic study, particularly older students who left school a long time ago.

A simple approach has been developed to help students better understand living cells in terms of shape, structure and contents. The approach was named "the smiley face approach" and has been perceived by many as very easy, understandable and successful.

Purposes

Describe the purposes of the evaluation, that is, what you are evaluating and the intended outcomes.

Louise

The purpose of the evaluation is threefold:

(a) to determine whether e-learning can satisfactorily replace the traditional lecture method of transmitting theoretical information to new students of nursing;

(b) to determine whether the Conception to Birth component was designed in an effective manner for student learning;

(c) to assess the effectiveness of the online component for student learning through the use of summative evaluation. [I presume you mean summative assessment?--Bronwynh 06:33, 8 October 2010 (UTC) ]

Aim:

The aim of the evaluation is to see whether the modified e-learning component of Lifespan Development, "Conception to Birth", is an effective learning tool for new students of nursing. [Great!]

Dana

Aim:

The principal aim of this evaluation is to explore how effective the e-learning component of the project is, including the smiley face approach to the cellular basis of life.

Literature Search

Louise and Dana

To evaluate the effectiveness of the Lifespan component “From Conception to Birth”, it is necessary to examine the total online e-learning package, including the learning environment, the course design, the learning outcomes to be achieved and the tools used in educational delivery. At the conclusion, it is important to evaluate how learners experienced the course and whether the learning outcomes were achieved to a level that demonstrates effective learning. As a starting point, particular studies provided some direction for evaluating effectiveness.

Questions concerning students' learning styles led to Kozub (2010), who questioned whether web-based courses should be designed specifically to cater for a range of learning styles. It has generally been assumed that web-based learning environments with a wide range of technological innovations are superior as a means of educational delivery. Kozub's ANOVA analysis of the relationships between business students’ learning styles and the effectiveness of web-based instruction used Kolb’s Learning Style Inventory to measure the learning styles of a group of business students and to allocate them to a control group and an experimental group. The research design differed only slightly between the two groups, both of which were exposed to web-based instruction modules.

The study showed that neither learning style nor instructional design had any impact on students' overall grades in either the control or the experimental group, nor was their overall satisfaction with the course affected. The research highlighted the need for more investigation to identify the factors that are important in instructional design for e-learning.

Herrington, Reeves and Oliver (2006) identified the importance of providing authentic online learning tasks that create a synergy among the learner, the task and the technology. The authors emphasise the role of authentic learning in supporting knowledge construction and meaningful learning, and point out that authenticity is a key factor in developing online instructional material.

Questions concerning student support for e-learning courses led to a case study conducted by Hansen, Nicholls, Williams, Monk and Baker (2008) using the e-Learning guidelines for New Zealand (S08) and focusing on Māori students. The research examined whether students received targeted guidance, study skills and the support necessary to promote effective e-learning. The researchers cited Sewart (Sewart, Keegan & Holmberg, 1983), who emphasises that all students require a full range of support. Offering e-learning courses that give students the flexibility of not attending face-to-face classes does not remove an educational institution’s responsibility to provide a full range of student learning support services.

Dulohery (n.d.) suggests that in the United States the focus has been on teacher effectiveness, and that there is a gap between the desired and the actual levels of learner achievement. To address this, an online classroom quality improvement (oCQI) programme has been developed to continually evaluate, modify and improve online teaching and learning. The process relies on student and peer teacher involvement throughout a course and at its conclusion.

[excellent range of literature to support your choice of evaluation.--Bronwynh 04:08, 29 September 2010 (UTC) ]

Limitations

Outline any limitations to the interpretation and generalizability of the evaluation. Also describe potential threats to the reliability and validity of the evaluation design and instrumentation.

Limitations to the interpretation and generalizability of the evaluation can include:

Louise

(a) The evaluation of the Conception to Birth component of the Lifespan is conducted in English, which can be difficult for students whose primary language is not English.

(b) 103 students participate in the summative evaluation of the ten-question Conception to Birth component of the summative test. This is a large sample, but it is specific to the "Conception to Birth" component of the total Lifespan. However, the evaluation could be widened to a larger sample, because the content is a basic component of all nursing curricula and the findings could therefore have wider generalizability.

Threats to the reliability and validity of the evaluation design and instrumentation depend on how closely the evaluation instruments match the content to be learned.

Reliability depends on whether the summative test for the online Conception to Birth component of the Lifespan consistently tests the information that students were required to learn, and whether the test can be repeated with succeeding cohorts undertaking the component to obtain similar results. [Also how reliable is the approach to the effectiveness evaluation itself - using a mix of data collection methods provides triangulation which contributes to reliability, and also validity.]

Validity refers to whether the summative test accurately tests the information to be learned. [has this test been used more than once - this in itself can indicate how valid the test is - whether consistent results are obtained with each class. Great work here in this section.--Bronwynh 06:50, 8 October 2010 (UTC)]

Dana

(a) The generalizability of the evaluation of “the smiley face approach” can be threatened by the limited number of students participating in the evaluation process, for a number of reasons. Many Nursing and Foundation for Nursing students are apparently mature students with other obligations and commitments, and might not have enough time to participate in the survey.

Nevertheless, it has the potential to be extended to a larger sample once the piloted programme is evaluated.

(b) The evaluation also requires competence with specific anatomical terms that must be learned before participating in drawing the smiley face. [the use of more than one data collection tool (triangulation) can overcome threats to reliability and validity of data due to small numbers of participants. Sometimes the depth of information you obtain rather than the number of responses to a survey is the more important indicator - though you are wise to be wary of making predictions. However, generalizing is possible if the results are described clearly, because readers can then make up their own mind about whether they might get the same results if they used the Smiley Face. ]--Bronwynh 06:50, 8 October 2010 (UTC)

Audiences

Specify all the primary and secondary audiences or consumers of the evaluation.

Louise

1. Students undertaking 722.520 Foundations of Nursing (Lifespan Conception to birth) component are the primary audience.

2. The evaluator and facilitator of this course.

3. Secondary audiences: other lecturers involved in teaching the Lifespan component.

4. Bachelor of Nursing Teams One and Two Co-ordinator.

5. Programme Leader of Bachelor of Nursing programme.

Dana

1. The primary audiences are MIT students who are enrolled in:

• Nursing.

• Foundation for Nursing.

• Health Studies.

2. Staff and lecturers who are interested in health studies and e-learning.

Decisions

This section is probably the most difficult, but it should be included if the evaluation is to have meaningful impact on decision-making. Both positive and negative outcomes should be anticipated.

Louise

Outcomes expected from the modification of the online component:

Positive outcomes would be:

1. Students participate fully in the discussion board;

2. Students take advantage of the formative test for their own learning, and the measured participation rate is at least 50%;

3. Students demonstrate 100% achievement on the ten questions related to the Conception to Birth component of the forty-question summative test, which is worth 20% of their grade for the Foundations of Nursing paper;

4. In the light of evaluation from students on the effectiveness of the Conception to Birth component of the Lifespan Curriculum, resources are allocated from the departmental budget to provide release time to further modify the Conception to Birth component.

5. Students state that they are happy with the on-line teaching resource for the Conception to Birth Component of the Lifespan and their achievement results support this decision.
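As a rough illustration only (not part of the original plan), the 50% participation target in outcome 2 could be checked with a small Python sketch. The function names and the enrolment and participation counts below are hypothetical, chosen purely to show the arithmetic.

```python
def rate_percent(count, total):
    """Return `count` as a percentage of `total` (e.g. students who sat the formative test)."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= count <= total:
        raise ValueError("count must lie between 0 and total")
    return 100.0 * count / total


def meets_target(count, total, target_percent):
    """True when the observed rate reaches the target (at least, not strictly more than)."""
    return rate_percent(count, total) >= target_percent


if __name__ == "__main__":
    # Hypothetical figures for illustration only; not results from this evaluation.
    enrolled = 110
    sat_formative_test = 55
    rate = rate_percent(sat_formative_test, enrolled)
    print(f"Formative test participation: {rate:.1f}%")
    print("50% target met:", meets_target(sat_formative_test, enrolled, 50))
```

The same helper could compute other rates in this section; for a strict "more than" threshold, compare the rate with `>` rather than using `meets_target`.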

Negative Outcomes could be:

1. Poor participation (>30%) in the discussion board

2. Poor participation (>30%) in the 20-question formative test on the Conception to Birth component of the Lifespan teaching

3. Poor pass rate (>30%) on the ten-question component of the 40-question Lifespan summative test

4. Students prefer face to face lecture method of educational delivery rather than on-line teaching.

Decision from the total process: further modification of the Conception to Birth component of the Lifespan teaching, according to the students' demonstrated performance and the feedback from their evaluation of the component.

Dana

Decision: whether to continue using the smiley face approach to the cellular basis of life in its present form or to modify it.

Expected outcomes:

1. Students wholeheartedly embrace the smiley face as a successful learning tool.

2. Students achieve (>60%) on bioscience questions related to the components of the cellular structure in their test.

3. Students can readily recall the components of the cellular structure at random.

Negative outcomes:

1. Students do not find the smiley face helpful to their learning.

2. Students do not achieve (>60%) on bioscience questions related to the components of the cellular structure in their test.

3. Students find it difficult to recall the components of the cellular structure at random.

Questions

List your 'big picture' evaluation questions and sub-questions here. A key element of a sound evaluation plan is careful specification of the questions to be addressed by the evaluation design and data collection methods. The clearer and more detailed these questions are, the more likely that you will be able to provide reliable and valid answers to them. Note: The eLearning Guidelines can assist here.

Louise

Aim/Purpose: The overall aim and purpose of the evaluation is to determine whether the modified e-learning component of Lifespan Development, "Conception to Birth", is an effective learning tool for new students of nursing.

Evaluation big questions:

The purpose of the evaluation is threefold

Q 1: Is the conception to birth component designed in an effective manner for student learning?

Sub-questions:

- How usable did students find the resources?

- How did students use the discussion board for learning?

- What were the perceptions of the students about the online component?

- How did students use the formative learning tools to assist their learning?

Q 2: Did the formative and summative test results indicate that students had an understanding of the material in the online component?

Sub-questions:

- What did the formative test results demonstrate in relation to the way students had used the online material?

- How did the summative test results demonstrate students had achieved an adequate level of understanding about the subject?

Students' perceptions, as gathered by the summative evaluation tool specific to the online Conception to Birth component of the Lifespan:

Question 1

Were you able to follow the orientation instructions for the course? Yes No

Question 2

Was access via eMIT easy? Yes No [Louise - you would get richer data if you ask participants to rate their responses. You don't need to do this for all questions - but if they are all Yes/No answers you will not be able to ascertain the degree. For example, this would be better with a 4 point Likert-type scale: For example Access to eMit is easy - strongly agree, agree, disagree, strongly disagree. --Bronwynh 06:59, 8 October 2010 (UTC)]

[Hopefully you can reword the other questions so you get ratings - this will give you a much clearer picture of where the difficulties lie. ]

Question 3

Were the instructions for your learning clear? Yes No

Question 4

Were you able to access the prescribed text? Yes No

Question 5

Were you able to access additional readings related to the topic? Yes No

If yes, did you find the reading material interesting? Yes No

If yes, did you find the questions easy to answer from your textbook reading? Yes No

Question 6

Were you able to complete the formative assessments using the instructions provided? Yes No

Question 7

Were you able to access the multi-choice formative assessment at the required time? Yes No

Question 8

Was the timing for the course realistic? Yes No

Question 9

Was the timetabling of activities helpful? Yes No

Question 10

Were the group learning activities using the "Discussion Board" helpful to your learning? Yes No

If yes, were the questions asked relevant to your key learning goals? Yes No[I would ask them to explain their answer - why they were relevant this will give you richer data. Have a few more open questions as this will give you more information. --Bronwynh 06:59, 8 October 2010 (UTC)]

If yes, did your fellow students assist you with your learning? Yes No

Question 11

Was teacher support sufficient to facilitate learning? Yes No

Question 12

Was teacher feedback helpful throughout? Yes No

Question 13

Have you found the e-learning environment effective for your style of learning? Yes No

Question 14

Was group participation effective? Yes No [this definitely needs to be an open question so you get some useful qualitative data.--Bronwynh 06:59, 8 October 2010 (UTC) ]

Any other comments?

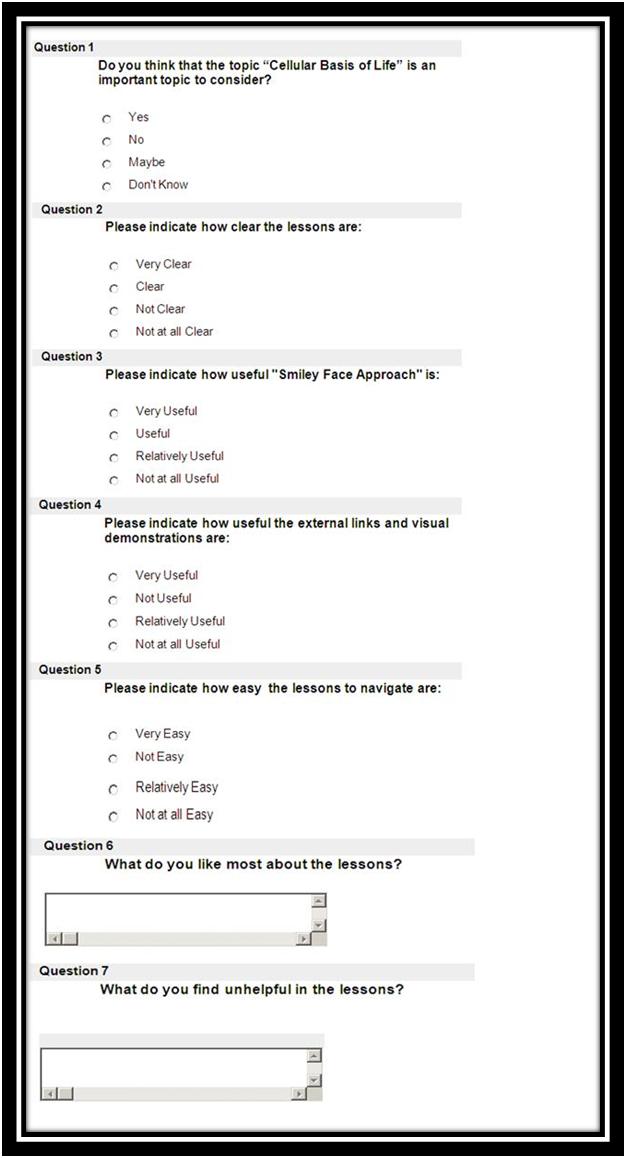
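If the Yes/No items above are reworded to the 4-point Likert-type scale the reviewer suggests, each item's responses could be summarised as counts per option plus a mean score. The following minimal Python sketch is an illustration only: the numeric coding (4 = strongly agree down to 1 = strongly disagree), the function name and the sample answers are assumptions, not part of the evaluation form.

```python
from collections import Counter

# Assumed coding for the reviewer's 4-point scale (hypothetical, for illustration).
SCALE = {
    "strongly agree": 4,
    "agree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def summarise_item(responses):
    """Return (counts per scale label, mean score) for one questionnaire item."""
    normalised = [r.strip().lower() for r in responses]
    unknown = [r for r in normalised if r not in SCALE]
    if unknown:
        raise ValueError(f"unrecognised responses: {unknown}")
    counts = Counter(normalised)
    # Report every label, including options nobody chose.
    table = {label: counts.get(label, 0) for label in SCALE}
    mean = sum(SCALE[r] for r in normalised) / len(normalised) if normalised else None
    return table, mean


if __name__ == "__main__":
    # Made-up answers to "Access to eMIT is easy", for illustration only.
    answers = ["agree", "agree", "strongly agree", "disagree"]
    table, mean = summarise_item(answers)
    print(table)
    print(f"mean score = {mean:.2f}")
```

Reporting the full distribution alongside the mean keeps the "degree" of agreement visible, which is the point of moving away from Yes/No answers.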

Dana

1. How effective was the eLearning component of the project for learning in terms of usefulness, ease of access and engagement of students?

Sub-questions (to be answered in the survey to measure effectiveness):

- How beneficial did students find the lessons?

- How useful did the students find the materials and external links?

- Which features did students believe were easy to navigate?

- What worked very well?

- How do the resources and activities influence the level of engagement by students?

- Why was the topic “Cellular Basis of Life” important to students' learning?

- Why is the timing of the online lessons important for students' learning?

2. What good practice can be identified and developed further to help others in the field of e-learning and related area (Winter, 2010)?

3. What can be done better in terms of design, development and delivery (Winter, 2010)?

Methods

Describe the evaluation design and procedures. Match the method to the purposes and questions of your evaluation, and the phase of eLearning, e.g., analysis, design, development, implementation.

Louise

Data collection methods:

Design of evaluation

It is proposed to use a range of data collection methods to evaluate the effectiveness of the on-line conception to birth component of the lifespan curriculum content. These are as follows:

1. An evaluation form has been developed as part of the Conception to Birth component of the Lifespan, and students are asked to complete the evaluation form. [what does this form ask?--Bronwynh 07:17, 8 October 2010 (UTC)]

2. Lecturer assessing the discussion board regularly over a two-week period to assess participation and the quality of the responses to the questions asked. Five discussion board results will be assessed for quality of response. [ are you still going to do this? perhaps mention that if there is time this will be done for this evaluation project. --Bronwynh 07:17, 8 October 2010 (UTC)]

3. Lecturer assessing the formative test participation and results of the students who participated.

4. Lecturer assessing the summative questions as part of a formal test of the lifespan component of the curriculum to include conception to birth (10 questions)[ is it the results you are assessing?--Bronwynh 07:17, 8 October 2010 (UTC)]

5. Students completing an evaluation of the conception to birth component of the lifespan content[make sure you get feedback from me on the questions first. --Bronwynh 05:17, 29 September 2010 (UTC) (See evaluation form)]

Dana

- Online survey

- Face-to-face interviews: An independent lecturer will interview lecturers and BN students who visit the LSC to find out how effective the lessons are.[ need to see some interview questions please. --Bronwynh 07:17, 8 October 2010 (UTC)]

- Summative assessment: BN students who have visited the Blackboard course will be asked to draw and label the structure of a living cell.

- Feedback from lecturers and experts: Lecturers are asked to access the discussion board to assess participation and to assess the quality of the responses and feedback. [ not sure why this is here, because it is not mentioned previously - why is this useful information and how will this measure effectiveness of the smiley face? you can explain in this section if you like.--Bronwynh 07:17, 8 October 2010 (UTC) ]

Sample

Specify exactly which students and personnel will participate in the evaluation. If necessary, a rationale for sample sizes should also be included.

Louise

Sample

1. 101 students

2. 6 lecturers

Dana

1- Nursing students who visit the LSC

2- Foundation for Nursing students who visit the LSC

3- Lecturers from Foundation, Nursing and Health Studies, MIT

4- Two learning advisors from the LSC

5- Two librarians from MIT

Instrumentation

Outline all the evaluation instruments and tools to be used in the evaluation. Actual instruments, e.g., questionnaire, interview questions etc., should be included in appendices.

Logistics and Timeline

Outline the steps of the evaluation in order of implementation, analysis, and reporting of the evaluation, and include a timeline.

Louise

Implementation

Instrumentation

1. Evaluation form

2. Multichoice questions – formative

3. Multichoice questions – summative

Timeline: one semester (20 weeks).

1. Discussion board open for four weeks – the month of August, 2010

2. Analysis of responses on the discussion board for 12 groups of 10 students twice per week, and responding to the students: 8 hours per week = 40 hours

3. Monitoring hits and performance on formative test of participating students –August to September 3 - 4 hours per week

4. Summative test results = 8 hours

5. Evaluation of component per evaluation form yet to be determined – 4 hours

6. Approximately 56 hours estimated in the evaluation process

Dana

• Online survey design – August (already started)

• Survey implementation: August, September and October

• Individual formative assessment: September & October

• Keeping a blog: September (already created)

• Daily blog updating for documentation

• New tools: Design and Implementation: Perhaps ongoing

Budget

Provide a rough costing of the evaluation process. For example, the evaluator's time and costs, payment of other participants etc.,

Louise

56 hours at $45 per hour = $2,520

Dana

40 hours at $45 per hour = $1,800

Future Development

Dana

1- More effective tools for collaboration, interactions and discussions

2- More relevant topics and external links

3- Effective team building (educators, lecturers and experts in the fields of e-learning and bioscience at MIT)

4- Collaboration with other departments such as the Technology Learning Centre and library.

References

Dulohery, Y. M. (n.d.). Online classroom quality improvement: A measure of online learning and teaching effectiveness. Journal of Instruction Delivery Systems, 23(2), 21-25.

Gratton, C., & Jones, I. (2004). Research methods for sport studies. New York: Routledge.

Hansen, J. J., Nicholls, L., Williams, M., Monk, W., & Baker, P. (2008). Ensuring Māori students receive targeted guidance, study skills and the support required to promote effective e-learning experiences: A case study concerning e-Learning guideline S08. Waiariki Institute of Technology - Targeted guidance. In e-Learning guidelines for New Zealand [Guidelines Wiki]. Retrieved September 5, 2010, from http://elg.massey.ac.nz/index.php?title=Waiariki_Institute_of_Technology_-Targeted

Herrington, J., Reeves, T. C., & Oliver, R. (2006). Authentic tasks online: A synergy among learner, task and technology. Distance Education, 27(2), 233-247.

Kozub, R. M. (2010). An ANOVA analysis of the relationships between business students’ learning styles and effectiveness of web based instruction. American Journal of Business Education, 3(3), 89-98.

Marieb, E., & Hoehn, K. (2010). Overview of the cellular basis of life. In Human anatomy & physiology. San Francisco, CA: Pearson Education.

Reeves, T. C., & Hedberg, J. G. (2003). Interactive learning systems evaluation. Englewood Cliffs, NJ: Educational Technology Publications.

Winter, M. (2010). Second life education in New Zealand: Evaluation research final report. Retrieved September 23, 2010, from http://wikieducator.org/images/1/13/Slenz-final-report-_milestone-2_-080310cca.pdf